The two internets: human web and agentic web

We are at a point in internet history where we still have the human web, the one we are using right now to interact with websites. But we also have the agentic web, which is the internet of agents.

Even in a typical online meeting, half of the “participants” may not be human. Note-takers, meeting assistants, and AI summarizers are all agents already reading your content and your transcripts. At the same time, LLM providers are releasing their own browsers, and their bots crawl and retrieve content across the web.

From a B2B marketing perspective, this means:

- Your website is now being read by humans, Google, and a growing family of LLM bots.

- These bots use your content differently: to train models, to index your pages, to understand your business, and to retrieve fresh data during live conversations.

- Your visibility is no longer just about ranking number one; it is also about being surfaced and cited in AI-driven experiences.

Planning for AI search in 2026 is about understanding this new reality and making sure your brand is visible, readable, and correctly positioned in both webs.

Why AI search belongs in your 2026 budget

For years, SEO has been the channel where you rank number one and harvest the fruit from Google search. Now, with AI overviews sitting on top of results and users clicking less, that model is breaking.

A few things are happening at once:

- AI overviews on at least 60% of queries. On a large share of search results, AI-generated answers, expandable sections, callouts, and videos push organic links far down the page. On mobile, ranking number one often sits deep below the fold.

- Most educated B2B buyers use LLMs. In tech and SaaS, more and more buyers turn to ChatGPT, Perplexity, and Gemini for research, and they trust what LLMs surface and cite.

- Two visibility layers. LLMs have their own memory of your brand from model training, and they also peek at live sources. They don’t go very deep because it costs resources. Therefore, they surface the most visible, trusted mentions, compare them with their memory, and then blend them into answers.

The new reality is that you need to plan strategically for being visible, found, and cited in the right way in this new medium. That’s why AI visibility and AI search tools deserve their own line items in your 2026 budget.

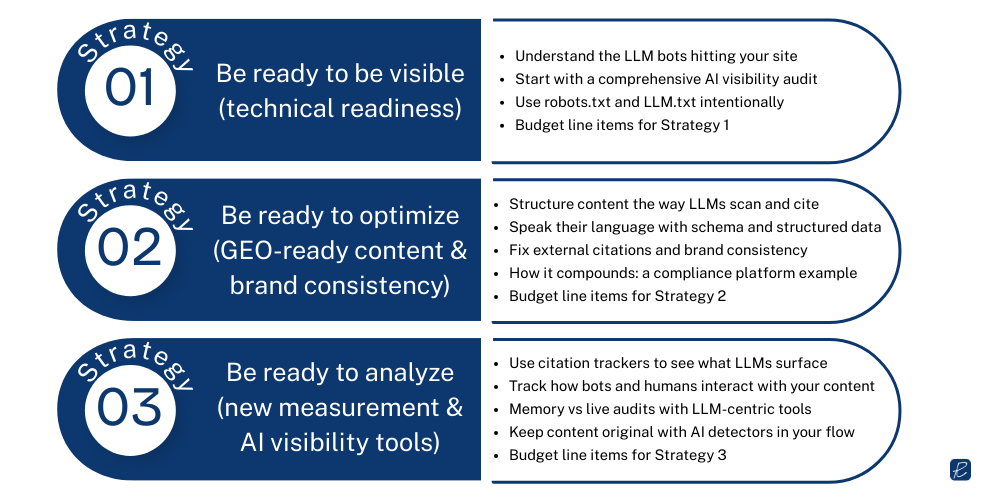

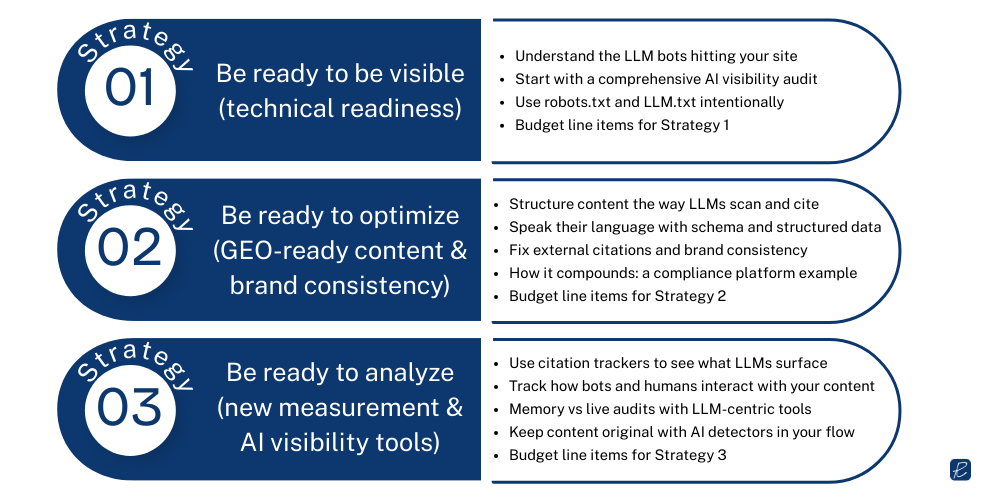

Strategy 1: Be ready to be visible (technical readiness)

Strategy number one is visibility itself. LLMs are still machines, and the way they discover your brand’s content is very similar to how search engines do it, with one key difference in how they use your data.

Understand the LLM bots hitting your site

There are three main types of LLM-related bots:

- Indexer bots: very similar to Googlebot. They discover and index your content.

- Training bots: they grab content from your website for model training purposes.

- Retrieval bots: they visit your website during a live conversation to grab fresh information and augment the model’s memory-based data.

Retrieval bots are the ones that show up when a buyer asks a question in ChatGPT or Perplexity and the model needs an up-to-date answer. If they can’t easily read your content, you’re out of the conversation.

Treat these bots as a new class of high-value “visitors” you can influence with technical decisions.

Start with a comprehensive AI visibility audit

Before you invest, you need to understand where you stand:

- What can LLM bots actually access on your site?

- Are there parts blocked by robots.txt, JavaScript frameworks, or infrastructure choices?

- Which content types are most visible right now?

- Are there technical enhancements needed on the engineering side?

A good AI visibility audit looks at technical readability and crawlability (for both search engine bots and LLM bots). It also checks whether your current setup supports the next steps: schemas, LLM-specific files, and content structures that models can scan and cite.
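For the robots.txt part of the audit, Python's standard-library `urllib.robotparser` can already tell you which AI crawlers a given robots.txt lets through. This is a minimal sketch, not a full audit: the robots.txt policy, the example.com URLs, and the bot list are hypothetical, and it does not cover JavaScript rendering or infrastructure-level blocking.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical policy: opt out of OpenAI model training (GPTBot), stay open to
# OpenAI search indexing and live retrieval, and keep /internal/ private for everyone.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: OAI-SearchBot
User-agent: ChatGPT-User
Disallow: /internal/
Allow: /

User-agent: *
Disallow: /internal/
"""

# Bot tokens and pages to check (placeholders; use the bots you see in your own logs).
BOTS = ["GPTBot", "OAI-SearchBot", "ChatGPT-User", "PerplexityBot"]
PAGES = [
    "https://example.com/blog/ai-search",
    "https://example.com/internal/pricing-draft",
]


def audit(robots_txt, bots, pages):
    """Return {(bot, url): allowed} for every bot/page pair under robots_txt."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {(bot, url): parser.can_fetch(bot, url) for bot in bots for url in pages}


if __name__ == "__main__":
    for (bot, url), allowed in audit(ROBOTS_TXT, BOTS, PAGES).items():
        print(f"{bot:15} {'allowed' if allowed else 'blocked':8} {url}")
```

One caveat on the design: `urllib.robotparser` applies the first matching rule in a group, while Google documents longest-match precedence. Listing specific `Disallow` lines before a broad `Allow: /`, as above, gives the same answer under both.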

Use robots.txt and llms.txt intentionally

One of the new tactics you can implement is optimizing your content specifically for LLMs. Alongside robots.txt, you can now publish llms.txt: a Markdown file at the root of your domain (/llms.txt) that LLMs can pick up as a source of information about your brand. It is still a proposed convention, so support varies across AI platforms.

You can:

- Declare what your brand does in language that’s easy for models to reuse.

- Point LLMs to specific sections of your site you want them to prioritize.

- Keep sensitive areas out while still exposing high-value knowledge.
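A minimal sketch of generating such a file, assuming the layout described in the public llms.txt proposal: an H1 with the brand name, a blockquote summary, then H2 sections of Markdown links with short notes. The brand name, summary, and URLs are placeholders. Note that llms.txt only points models toward content; keeping areas out is still robots.txt's job.

```python
def build_llms_txt(name, summary, sections):
    """Render an llms.txt file in the layout of the public llms.txt proposal.

    sections maps an H2 heading to a list of (title, url, note) tuples.
    """
    lines = [f"# {name}", "", f"> {summary}", ""]
    for heading, links in sections.items():
        lines += [f"## {heading}", ""]
        for title, url, note in links:
            lines.append(f"- [{title}]({url}): {note}")
        lines.append("")
    return "\n".join(lines)


# Placeholder brand and URLs for illustration only.
LLMS_TXT = build_llms_txt(
    name="Acme Compliance",
    summary="Acme Compliance is a compliance automation platform for B2B SaaS teams.",
    sections={
        "Product": [
            ("Platform overview", "https://example.com/platform", "What the product does and who it is for"),
            ("Pricing", "https://example.com/pricing", "Plans and what each includes"),
        ],
        "Optional": [
            ("Blog", "https://example.com/blog", "Guides on compliance frameworks"),
        ],
    },
)

if __name__ == "__main__":
    print(LLMS_TXT)
```

Serve the output at /llms.txt. In the proposal, a section titled “Optional” marks links a model may skip when it needs a shorter context.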

You can also create supplementary copy intended for LLM bots, such as plain Markdown versions of key pages linked from llms.txt, that helps them understand your content better without changing what humans see.

All of this requires engineering support. The llms.txt syntax, bot management, and the technical infrastructure around your content all need developer time. Plan for it, because optimizing for LLMs is not currently a content-only exercise.

Budget line items for Strategy 1

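When you think about 2026 planning, Strategy 1 usually includes:

- A comprehensive AI visibility audit covering crawlability and readability for both search engine bots and LLM bots.

- Developer time for robots.txt, llms.txt, and bot management.

- Engineering fixes for crawlability issues caused by JavaScript frameworks or infrastructure choices.

- Technical groundwork for schema implementation.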

Strategy 2: Be ready to optimize (GEO-ready content & brand consistency)

We are not just writing for humans anymore. We are not just optimizing for search engine bots. We also need to keep in mind the new actors that will be reading our content: LLMs.

This is where Generative Engine Optimization (GEO) and content readiness come in.

Structure content the way LLMs scan and cite

The principles we use in GEO overlap with Google’s content best practices, but they are not identical. LLMs scan and grade content differently, so some structures matter more:

- Bottom line upfront. Clear definitions and summaries at the top of a page.

- FAQ sections. Short, direct answers to very specific questions.

- Trust and expertise signals. Named authors, citations, and clear expertise behind the content.

If your content is not structured so that machines can quickly scan, understand, and cite it, you are unlikely to be surfaced in AI answers.

What we see working well for B2B brands:

- Content refresh cycles instead of one-time optimization.

- Enhancing existing content with new facts, research, citations, and trust factors.

- Making sure LLM-friendly sections (definitions, FAQs, bottom line upfront) are consistently present on key pages.

Speak their language with schema and structured data

In Strategy 1 (visibility), you implement schema on your website so you have the technical ability to support it. In Strategy 2 (optimization), you need to fill out every relevant property of each schema type or tag you use.

In other words, speak their language.

If LLMs like reading structured data in schema format, and our results suggest they do, squeeze every bit out of the available schema types on every page that is important to your business. Organization, Article, BreadcrumbList, Product, FAQPage: all of them can be useful if applied correctly.

We have already seen better third-party metrics and better visibility for pages that use extensive schema properties compared to those that do not.
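As an illustration of filling out schema properties, here is a minimal sketch that builds schema.org Organization and FAQPage objects as JSON-LD and wraps them in the script tag that goes into the page HTML. The type and property names (`sameAs`, `mainEntity`, `acceptedAnswer`) come from schema.org; the brand, URLs, and Q&A text are placeholders.

```python
import json


def organization_jsonld(name, url, description, same_as):
    """schema.org Organization; sameAs links the site to its external profiles."""
    return {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        "description": description,
        "sameAs": same_as,
    }


def faq_jsonld(pairs):
    """schema.org FAQPage built from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }


def script_tag(data):
    """Wrap a JSON-LD object in a script tag for embedding in the page HTML."""
    return '<script type="application/ld+json">' + json.dumps(data, indent=2) + "</script>"


# Placeholder data for illustration only.
ORG = organization_jsonld(
    "Acme Compliance",
    "https://example.com",
    "Compliance automation platform for B2B SaaS teams.",
    ["https://www.linkedin.com/company/example"],
)
FAQ = faq_jsonld([("What is GEO?", "Generative Engine Optimization structures content so LLMs can cite it.")])

if __name__ == "__main__":
    print(script_tag(ORG))
    print(script_tag(FAQ))
```

Keeping the `sameAs` list current also ties your site to the external profiles discussed in the brand consistency section.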

Fix external citations and brand consistency

If you manage a brand with a large digital footprint, especially after repositioning, mergers, or brand name changes, LLMs see a very diverse set of signals.

They know something about you from model training. They also check live sources, but they don’t go too deep because every extra fetch costs them resources. Instead, they surface highly visible platforms and use them to blend answers and recommendations.

That is why brand consistency across highly visible sources is critical.

Typical gaps:

- LinkedIn company pages that haven’t been updated in more than a year.

- “About us” pages that don’t reflect your current positioning.

- Old descriptions on review platforms like G2, Capterra, or similar.

- Social media bios that still talk about your previous focus.

If those pages are outdated, models will keep presenting you in the wrong way, even if your main site is up to date.
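If you keep an inventory of your external profiles, a small script can flag the gaps listed above. This is a minimal sketch under stated assumptions: the inventory format, the one-year threshold, and the “does the description mention the current brand name” check are illustrative choices, and the sample data is hypothetical.

```python
from datetime import date


def stale_profiles(profiles, brand_name, today, max_age_days=365):
    """Flag external profiles that look outdated.

    profiles: dicts with "platform", "last_updated" (date), and "description".
    A profile is flagged if it is older than max_age_days or its description
    does not mention the current brand name.
    """
    flagged = []
    for profile in profiles:
        reasons = []
        if (today - profile["last_updated"]).days > max_age_days:
            reasons.append(f"not updated in over {max_age_days} days")
        if brand_name.lower() not in profile["description"].lower():
            reasons.append("missing current brand name")
        if reasons:
            flagged.append((profile["platform"], reasons))
    return flagged


# Hypothetical inventory after a rebrand from "OldName" to "NewName".
PROFILES = [
    {"platform": "LinkedIn", "last_updated": date(2024, 6, 1),
     "description": "OldName helps banks audit faster."},
    {"platform": "G2", "last_updated": date(2025, 9, 1),
     "description": "NewName is a compliance automation platform."},
]

if __name__ == "__main__":
    for platform, reasons in stale_profiles(PROFILES, "NewName", today=date(2025, 11, 1)):
        print(platform, "->", ", ".join(reasons))
```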

How it compounds: a compliance platform example

A good illustration of Strategy 2 in action is a B2B compliance platform that came to us after migrating from one domain to another and completely changing its brand name.

They operate in a highly competitive compliance space. Our work focused on making sure their content was optimized for GEO:

- Implementing LLM-readable structures and schemas.

- Applying GEO-focused content optimizations across key pages.

- Reinforcing brand consistency and external citations.

- Continuing updates as AI overviews evolved.

We started working on their content when AI overviews were first released and continued for more than a year. Their AI overview appearances grew step by step, reaching around 850 and counting.

This is what consistent technical readiness, GEO principles, and brand consistency look like when they compound. You can read more about this project in our case studies section.

Budget line items for Strategy 2

For 2026, Strategy 2 typically includes:

- Content refresh cycles focused on GEO structures (BLUF, FAQs, definitions, trust).

- Schema implementation and maintenance across key pages.

- External profile clean-up and copy updates for LinkedIn, “About us”, social profiles, and review sites.

- A GEO-focused content optimization program with clear prioritization of pages and topics.

Strategy 3: Be ready to analyze (new measurement & AI visibility tools)

The third strategy is LLM visibility measurement. A lot of conversations are circling around this already, and the bigger the company or the faster the growth stage, the more important it is to navigate the new arena of tools and KPIs.

You need to plan for a separate line item for the new generation of measurement tools.

Use citation trackers to see what LLMs surface

One example is a citation tracker that shows:

- The citations you are getting.

- The most popular websites surfaced for the prompts you track.

- Which LinkedIn posts, newsletters, or pages are being surfaced by LLMs for your prompts.

- Which competitors appear alongside you.

This is not the same as keyword tracking for Google. For instance, the average length of a prompt in a conversation is around 20 words, and there is no way to predict the exact prompts your users type. Even if two people sit in one room and try to ask the “same” question, their prompts will differ.

So you have to treat these tools as a way to see macro trends, not precise rankings. They show:

- Which topics are resonating.

- What types of content are being surfaced for those topics.

- How your competitors show up in LLM answers.

This is already valuable when you decide what to publish, what to refresh, and where to double down.
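To read a citation tracker for macro trends rather than rankings, you can roll its export up by topic. This is a minimal sketch assuming a hypothetical export of (topic, cited domain) pairs, one per citation; real tools use their own formats, and the domains below are placeholders.

```python
from collections import Counter, defaultdict


def citation_share(rows, our_domain, top_n=3):
    """Summarize a citation tracker export by topic.

    rows: (topic, cited_domain) pairs, one per citation (hypothetical export format).
    Returns {topic: (our share of citations, top_n most cited domains)}.
    """
    by_topic = defaultdict(Counter)
    for topic, domain in rows:
        by_topic[topic][domain] += 1
    report = {}
    for topic, counts in by_topic.items():
        total = sum(counts.values())
        report[topic] = (counts[our_domain] / total, counts.most_common(top_n))
    return report


# Placeholder rows for illustration only.
ROWS = [
    ("compliance automation", "rival.example"),
    ("compliance automation", "example.com"),
    ("compliance automation", "rival.example"),
    ("soc 2 checklist", "example.com"),
]

if __name__ == "__main__":
    for topic, (share, leaders) in citation_share(ROWS, "example.com").items():
        print(f"{topic}: {share:.0%} of citations; most cited: {leaders}")
```

Tracking these shares per topic over time shows direction, which is the useful signal given how much individual prompts vary.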

Track how bots and humans interact with your content

On the bots side, breaking down bot hits by type (retrieval, training, indexer) shows which pages are most popular among different LLM bots. That is a strong signal:

- If a page is frequently hit by retrieval bots, it is often being used in live answers.

- If training bots focus on a piece, that content is likely feeding future model updates.

You can reverse-engineer this:

- Look at the content that is most popular with bots.

- Improve it, enrich it, and include it more clearly in your planning.

- Use it as a template for new pieces in the same area.
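If you have raw server logs, the bot breakdown can be approximated from user-agent strings. This is a minimal sketch assuming the Apache/Nginx “combined” log format and a token-to-type mapping based on vendors' published crawler descriptions at the time of writing (check each vendor's current documentation); the sample lines are made up.

```python
import re
from collections import Counter, defaultdict

# Assumed mapping of user-agent tokens to bot types; verify against vendor docs.
BOT_TYPES = {
    "gptbot": "training",            # OpenAI model-training crawler
    "claudebot": "training",         # Anthropic training crawler
    "ccbot": "training",             # Common Crawl, widely used as training data
    "oai-searchbot": "indexer",      # OpenAI search indexing
    "perplexitybot": "indexer",      # Perplexity search indexing
    "chatgpt-user": "retrieval",     # fetches pages during a ChatGPT conversation
    "perplexity-user": "retrieval",  # fetches pages during a Perplexity answer
}

# "Combined" log format: request line is the first quoted field, user agent the last.
LOG_LINE = re.compile(
    r'^\S+ \S+ \S+ \[[^\]]+\] "\S+ (?P<path>\S+)[^"]*" \d{3} \S+ "[^"]*" "(?P<ua>[^"]*)"'
)


def bot_type(user_agent):
    """Return "training", "indexer", "retrieval", or None for other traffic."""
    ua = user_agent.lower()
    for token, kind in BOT_TYPES.items():
        if token in ua:
            return kind
    return None


def top_pages_by_bot_type(log_lines, n=5):
    """Count hits per page for each bot type and return the top n pages per type."""
    counts = defaultdict(Counter)
    for line in log_lines:
        match = LOG_LINE.match(line)
        if not match:
            continue  # skip lines that are not in combined format
        kind = bot_type(match.group("ua"))
        if kind:
            counts[kind][match.group("path")] += 1
    return {kind: pages.most_common(n) for kind, pages in counts.items()}


# Made-up sample lines (documentation IP ranges), not real traffic.
SAMPLE_LOG = [
    '203.0.113.5 - - [10/Oct/2025:13:55:36 +0000] "GET /pricing HTTP/1.1" 200 512 "-" "Mozilla/5.0 (compatible; ChatGPT-User/1.0; +https://openai.com/bot)"',
    '203.0.113.5 - - [10/Oct/2025:13:57:02 +0000] "GET /pricing HTTP/1.1" 200 512 "-" "Mozilla/5.0 (compatible; ChatGPT-User/1.0; +https://openai.com/bot)"',
    '203.0.113.9 - - [10/Oct/2025:14:02:11 +0000] "GET /blog/geo HTTP/1.1" 200 2048 "-" "Mozilla/5.0 (compatible; GPTBot/1.1; +https://openai.com/gptbot)"',
    '198.51.100.7 - - [10/Oct/2025:14:05:00 +0000] "GET / HTTP/1.1" 200 4096 "https://www.google.com/" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
]

if __name__ == "__main__":
    print(top_pages_by_bot_type(SAMPLE_LOG))
```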

On the human side, you should start isolating AI platform traffic into its own bucket:

- First-party traffic from ChatGPT, Perplexity, Gemini, and similar.

- Landing pages for that traffic.

- Customer or user journeys from those landings.

- Micro conversions and conversions.

- Activity in CRM (HubSpot, Salesforce) that’s associated with those sessions.
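The first step, isolating AI platform traffic, usually comes down to classifying sessions by referrer. This is a minimal sketch: the hostname list is an assumption you should extend as platforms change, and the `utm_source` fallback assumes a platform tags outbound links that way (for example `utm_source=chatgpt.com`), which not every platform does.

```python
from urllib.parse import parse_qs, urlparse

# Assumed referrer hostnames for AI platforms; extend as new platforms appear.
AI_PLATFORMS = {
    "chatgpt.com": "ChatGPT",
    "chat.openai.com": "ChatGPT",
    "perplexity.ai": "Perplexity",
    "gemini.google.com": "Gemini",
    "copilot.microsoft.com": "Copilot",
    "claude.ai": "Claude",
}


def _match_host(host):
    """Map a hostname (or bare domain) to an AI platform name, or None."""
    host = host.lower()
    for domain, platform in AI_PLATFORMS.items():
        if host == domain or host.endswith("." + domain):
            return platform
    return None


def ai_platform(referrer, landing_url=""):
    """Return the AI platform a session came from, or None for other traffic."""
    platform = _match_host(urlparse(referrer).hostname or "")
    if platform:
        return platform
    # Fallback: some platforms tag outbound links with utm_source (assumption).
    utm = parse_qs(urlparse(landing_url).query).get("utm_source", [""])[0]
    return _match_host(utm)


if __name__ == "__main__":
    print(ai_platform("https://www.perplexity.ai/search?q=soc2"))
```

The resulting label can then be attached to sessions in your analytics tool and passed through to the CRM.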

We usually see AI-sourced traffic closer to the bottom of the funnel. A CRM activity report can show the role and impact of LLM platform traffic on deals.

Memory vs live audits with LLM-centric tools

Another important piece is understanding how models see your brand in their memory compared to live search:

- Memory-based knowledge comes from training data and has a cutoff date.

- Live-based knowledge comes from search augmentation when the model goes online.

Tools that run a “memory vs live” report let you:

- See how the model describes your brand from its memory.

- Compare it with a live snapshot based on current web content.

- Identify gaps, outdated positioning, and wrong competitor sets.

- Check where the model pulls its live information from (citations and sources).

This is the new way to look at your brand from an LLM’s point of view and decide what to fix first.
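Once you have both snapshots, the comparison itself is simple. This is a minimal sketch assuming you have transcribed a memory vs live report into a hypothetical structure (positioning sentence, competitor set, cited sources); the exact string comparison for positioning is deliberately crude, and all sample values are placeholders.

```python
def memory_vs_live_gaps(memory, live):
    """Compare two brand snapshots taken from the same model.

    memory: what the model says with web search off (training data only).
    live:   what it says with search augmentation on.
    Each is a hypothetical dict: {"positioning": str, "competitors": set, "sources": set}.
    """
    return {
        "positioning_differs": (
            memory["positioning"].strip().lower() != live["positioning"].strip().lower()
        ),
        "competitors_only_in_memory": sorted(memory["competitors"] - live["competitors"]),
        "competitors_only_in_live": sorted(live["competitors"] - memory["competitors"]),
        "live_sources": sorted(live["sources"]),
    }


# Placeholder snapshots for illustration only.
MEMORY = {"positioning": "Audit software for banks",
          "competitors": {"Rival A", "Rival B"}, "sources": set()}
LIVE = {"positioning": "Compliance automation platform for B2B SaaS",
        "competitors": {"Rival B", "Rival C"},
        "sources": {"https://www.linkedin.com/company/example", "https://example.com/about"}}

if __name__ == "__main__":
    print(memory_vs_live_gaps(MEMORY, LIVE))
```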

Keep content original with AI detectors in your flow

There is another tool category that is easy to miss: AI detectors and originality checkers.

The way LLMs generate content is probabilistic. They tend to produce the “most probable” phrase for a given context. That’s why generic, templated lines like “thank you for your time” show up everywhere. The more templated your writing is, the more synthetic it feels.

Right now, originality matters. It has high value for LLMs and for users. Even if we end up reading AI-generated content most of the time in the future, keeping your current content as original as possible is helpful.

Using AI-detecting tools in your content flow can:

- Help you spot overly synthetic passages.

- Encourage more authentic, in-context phrasing.

- Preserve a stronger “human signal” in your content.

Budget line items for Strategy 3

For 2026, Strategy 3 usually includes:

- A citation tracker subscription for AI platforms.

- A memory vs live audit tool for periodic brand-positioning checks.

- Time from analytics and RevOps to build LLM traffic dashboards and CRM reports.

- Optional AI detector tools integrated into your content workflow.

Don’t ignore media and social content

Text is only part of the story. A lot of human and AI brainpower is now focused on capabilities beyond text. LLMs can now interpret images, audio, and video.

Models are moving toward “world models,” where text, images, and other media types are blended in answers. As that evolves, having your media assets optimized for LLMs will become even more important.

A few practical steps you can already take:

- Treat every visual as an answer asset, not merely as decoration.

- Use descriptive alt text and titles that actually say what is in the image.

- Think more like an LLM: a title such as “Q&A slide of a presentation about budgeting for AI search in 2026” is much more informative than a generic label.

- Apply this “GEO-first image optimization” consistently across your visuals, including those you host on social platforms.
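To find images that still carry generic labels, you can scan page HTML with Python's standard-library `html.parser`. This is a minimal sketch built on a crude heuristic, assumed rather than standard: alt text shorter than five words is flagged as too generic. The sample HTML is hypothetical.

```python
from html.parser import HTMLParser


class AltTextAudit(HTMLParser):
    """Collect <img> tags whose alt text is missing or too short to describe the image."""

    def __init__(self, min_words=5):
        super().__init__()
        self.min_words = min_words
        self.flagged = []  # (src, alt) pairs that need better alt text

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attrs = dict(attrs)
        alt = (attrs.get("alt") or "").strip()  # missing or valueless alt -> ""
        if len(alt.split()) < self.min_words:
            self.flagged.append((attrs.get("src"), alt))


def audit_alt_text(html, min_words=5):
    parser = AltTextAudit(min_words)
    parser.feed(html)
    parser.close()
    return parser.flagged


SAMPLE_HTML = (
    '<img src="image-1.png" alt="image 1">'
    '<img src="budget-qa.png" alt="Q&amp;A slide of a presentation about budgeting for AI search in 2026">'
    '<img src="logo.png">'
)

if __name__ == "__main__":
    for src, alt in audit_alt_text(SAMPLE_HTML):
        print(f"{src}: {alt!r}")
```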

Most LLMs may not yet fully index social media content or handle video, but preparing your media now increases your chances of being surfaced when multimodal answers become standard.

How to think about your 2026 AI visibility budget

If you look holistically at how to optimize for LLMs, you have three big buckets to plan for:

- Be ready to be visible (technical).
  - Work hands-on with your website.
  - Make sure content is visible and indexable.
  - Implement schemas and technical controls.
  - Set up robots.txt and llms.txt and fix crawlability issues.

- Be ready to optimize (GEO and content).
  - Shift toward generative engine optimization principles.
  - Use clear definitions, expert citations, and trusted links.
  - Refresh and structure content so LLMs can scan and cite it easily.
  - Keep external brand assets consistent and up to date.

- Be ready to analyze (measurement and dashboards).
  - Monitor presence in ChatGPT, Perplexity, Gemini, and similar platforms.
  - Use citation trackers and memory vs live audits.
  - Isolate AI platform traffic in your analytics and CRM.
  - Measure how AI-sourced visitors behave and convert.

These three strategies feed into each other: visibility enables optimization, optimization improves citations, and measurement closes the loop and shows what works.

Where Rampiq fits into this

As a B2B-focused agency, Rampiq has been working with AI search and AI overviews for more than a year. We have:

- Completed hundreds of AI visibility audits.

- Designed and tested GEO principles in competitive B2B spaces.

- Helped B2B brands, such as the compliance platform above, grow AI overview appearances into the hundreds.

- Built and used tools for LLM visibility and citation tracking.

If you are in the middle of your budgeting exercise or just starting it, and you need clarity on how AI search fits into your 2026 plan, we offer an AI search clarity call where we:

- Look at where you are right now.

- Review your content and technical readiness.

- Talk through tools, budgets, and priorities.

- Help you plug AI search into your ongoing marketing effort.

The sooner you get ahead of this, the less “expensive” the visibility gap will be in terms of lost pipeline.