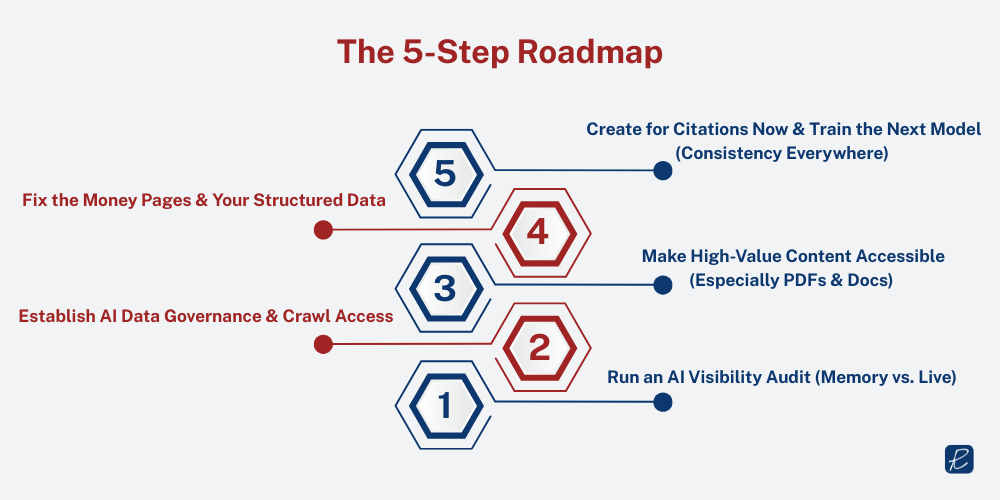

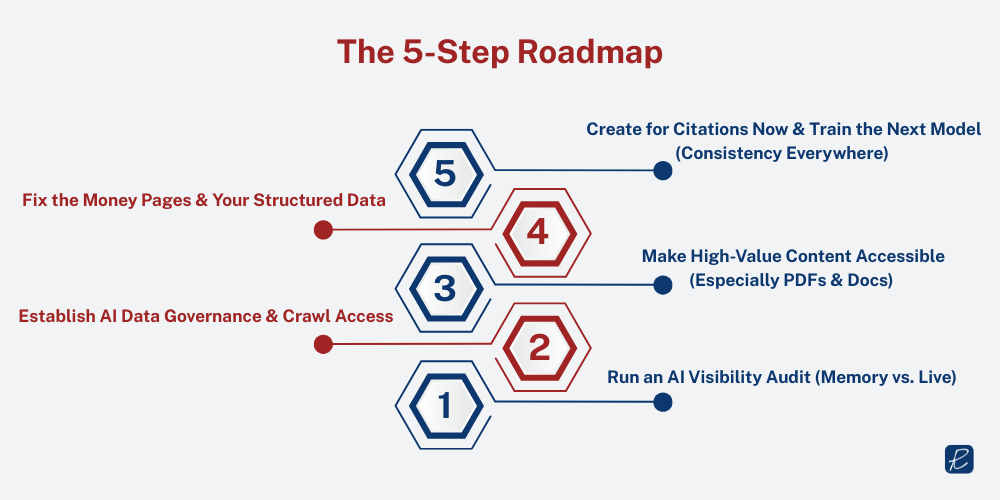

The 5-Step Roadmap

Step 1: Run an AI Visibility Audit (Memory vs. Live)

Models have two types of “memories.” The first is training-time knowledge with a cutoff date; the second kicks in when a search function is enabled. Many user sessions don’t enable live search, so the memory version can show vague, outdated, or just-wrong brand summaries and competitor sets.

If you don’t know what LLMs currently “remember” about you, you don’t know what your buyers are seeing. The gap between the memory and live views shows where to act first. The source list from the live results is especially valuable: it tells you which public pages models use to form your story and whom they treat as your competitors.

How to do it

- Perform a memory-based audit: what the model says you do, when you were founded, and who it thinks your competitors are. If you spot tool vendors or platforms as your “competitors” instead of real peers, you’ve found a high-priority gap.

- Pull a search-enabled audit: capture the exact domains and pages models cite when search is on, then inventory inaccuracies across those sources.
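The two audits above can be compared mechanically once the answers are captured. The sketch below is one way to do that, not a prescribed tool: `AuditView`, `build_gap_list`, and the sample names are hypothetical, and it assumes you have already copied each model's answer into simple fields by hand.

```python
from dataclasses import dataclass, field


@dataclass
class AuditView:
    """One captured answer from a model about the brand (hypothetical structure)."""
    description: str
    competitors: set[str]
    sources: list[str] = field(default_factory=list)  # only filled for the live view


def build_gap_list(memory: AuditView, live: AuditView, real_peers: set[str]) -> dict:
    """Compare both views against the competitor set you consider correct."""
    return {
        # Names the memory view lists that are not real peers (e.g. tool vendors).
        "wrong_in_memory": sorted(memory.competitors - real_peers),
        # Real peers the memory view never mentions.
        "missing_in_memory": sorted(real_peers - memory.competitors),
        # Names the live view lists that are not real peers.
        "wrong_in_live": sorted(live.competitors - real_peers),
        # Pages to review first: everything the live view cited, de-duplicated.
        "sources_to_review": sorted(set(live.sources)),
    }


if __name__ == "__main__":
    memory = AuditView("Vague agency description", {"ToolVendor", "PeerOne"})
    live = AuditView(
        "Sharper description",
        {"PeerOne", "PeerTwo"},
        ["https://directory.example/profile", "https://news.example/interview"],
    )
    for key, value in build_gap_list(memory, live, {"PeerOne", "PeerTwo"}).items():
        print(key, value)
```

The output doubles as the gap list for the checklist: wrong or missing competitors show what to correct, and the source list shows where to correct it.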

Memory vs. Live: what changes and what you do next

| View | What the model uses | Typical issues | Next steps |
| --- | --- | --- | --- |
| Memory | Training-time snapshot with cutoff | Vague positioning; wrong competitors | Fix public bios/footprints that feed models |
| Live | Search-enabled augmented results | Mixed source quality | Identify/upgrade the source list the model cites |

Pitfalls

- Treating this like a one-and-done. Models evolve; your footprint needs to keep up.

- Only looking at your own site. The source list lives across the public web; that’s where corrections often have to be made.

When the live audit surfaces a better competitor set and links to where that info came from, it becomes “my gold… now I know where the models retrieved the information.”

Quick checklist

- Memory view captured (brand description, services, competitors).

- Live/search view captured (sources, dates, competitor set).

- Gap list created (what’s vague/wrong; which sources to fix first).

Step 2: Establish AI Data Governance & Crawl Access

LLM crawlers still behave like other bots. You can manage them with robots.txt and by ensuring your site is technically discoverable. Different AI vendors run multiple bots for different purposes.

The good news: you have levers. You can decide what LLMs see and, just as importantly, prevent accidental invisibility caused by frameworks or gating that block access to your best content.

How to do it

- Set explicit robots.txt policies for AI crawlers. OpenAI, Perplexity, and Anthropic each run more than one bot type, so write rules per bot where it matters. Allow the sections you want learned and cited; restrict clearly sensitive paths.

- Confirm discoverability: some React/JavaScript implementations make it harder for bots to access content. Spot-check critical pages.

- Keep a simple governance doc that maps content sections to allow/deny decisions and owners.
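Before relying on a policy, it helps to check what it actually permits. The following is a minimal sketch using Python's standard `urllib.robotparser`. The policy text and URLs are made up, and the user-agent tokens (`GPTBot`, `PerplexityBot`, `ClaudeBot`) are assumptions that should be confirmed against each vendor's current documentation.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical policy text; in practice, read your deployed robots.txt.
ROBOTS_TXT = """\
User-agent: *
Allow: /docs/
Allow: /resources/
Disallow: /customer-portal/
Disallow: /staging/
"""

# Assumed user-agent tokens; verify them before use.
AI_AGENTS = ["GPTBot", "PerplexityBot", "ClaudeBot"]


def access_report(robots_txt: str, agents: list[str], urls: list[str]) -> dict:
    """Return {(agent, url): allowed} for every agent/URL pair."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {
        (agent, url): parser.can_fetch(agent, url)
        for agent in agents
        for url in urls
    }


if __name__ == "__main__":
    urls = [
        "https://www.example.com/docs/setup",
        "https://www.example.com/staging/draft",
    ]
    for (agent, url), allowed in access_report(ROBOTS_TXT, AI_AGENTS, urls).items():
        print(f"{agent:15} {'allow' if allowed else 'deny ':5} {url}")
```

Python's parser applies the first rule that matches, while some crawlers prefer the most specific match, so treat the report as a sanity check rather than proof of how a given bot behaves.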

AI Bot Governance Matrix (example structure)

| Vendor | Bot categories (as noted) | Our policy | Paths / Exceptions | Notes |
| --- | --- | --- | --- | --- |
| OpenAI | Multiple | Allow key knowledge; deny sensitive | /docs, /resources | Review quarterly |
| Perplexity | Multiple | Allow | /whitepapers | Test PDF fetch |
| Anthropic | Multiple | Allow | /guides | Monitor logs |
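If the matrix lives as data, per-bot robots.txt groups can be generated from it, so the governance doc and the deployed file do not drift apart. This is a sketch under stated assumptions: the `MATRIX` rows mirror the example above, the user-agent tokens are assumed, and the OpenAI deny path is a placeholder for whatever "sensitive" means on your site.

```python
# Rows mirror the example matrix. Tokens and the deny path are assumptions.
MATRIX = [
    {"vendor": "OpenAI", "agents": ["GPTBot"],
     "allow": ["/docs/", "/resources/"], "deny": ["/customer-portal/"]},
    {"vendor": "Perplexity", "agents": ["PerplexityBot"],
     "allow": ["/whitepapers/"], "deny": []},
    {"vendor": "Anthropic", "agents": ["ClaudeBot"],
     "allow": ["/guides/"], "deny": []},
]


def render_robots(matrix: list[dict], sitemaps: list[str] = ()) -> str:
    """Render one robots.txt group per matrix row, then any Sitemap lines."""
    lines: list[str] = []
    for row in matrix:
        lines.append(f"# {row['vendor']}")
        lines.extend(f"User-agent: {agent}" for agent in row["agents"])
        lines.extend(f"Allow: {path}" for path in row["allow"])
        lines.extend(f"Disallow: {path}" for path in row["deny"])
        lines.append("")  # a blank line separates groups
    lines.extend(f"Sitemap: {url}" for url in sitemaps)
    return "\n".join(lines).rstrip() + "\n"


if __name__ == "__main__":
    print(render_robots(MATRIX, ["https://www.example.com/sitemap.xml"]))
```

A group with only `Allow` lines restricts nothing, because paths are allowed by default. It is still useful as a written record of intent.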

Robots.txt starter (paste and adapt)

    # Allow LLMs to learn from specific knowledge areas
    User-agent: *
    Allow: /docs/
    Allow: /resources/
    Allow: /whitepapers/
    # Keep sensitive paths out
    Disallow: /pricing-calculator/
    Disallow: /customer-portal/
    Disallow: /staging/

    # Optional: point crawlers to file sitemap(s)
    Sitemap: https://www.example.com/sitemap.xml
    Sitemap: https://www.example.com/sitemap-files.xml

Pitfalls

- Blanket disallows that hide valuable knowledge.

- Assuming SPA pages are rendered and readable without testing.
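One quick way to spot-check an SPA page is to look at the raw HTML a crawler receives before any JavaScript runs and see whether the key phrases are already there. The sketch below assumes you have fetched that HTML yourself (for example with a plain HTTP GET). `missing_phrases` is a hypothetical helper, and it says nothing about crawlers that do render JavaScript.

```python
from html.parser import HTMLParser


class _TextExtractor(HTMLParser):
    """Collects text visible without running scripts; skips script/style bodies."""

    def __init__(self) -> None:
        super().__init__()
        self._skip_depth = 0
        self.chunks: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.chunks.append(data)


def missing_phrases(raw_html: str, phrases: list[str]) -> list[str]:
    """Return the phrases that do not appear in the unrendered page text."""
    extractor = _TextExtractor()
    extractor.feed(raw_html)
    extractor.close()
    text = " ".join(" ".join(extractor.chunks).split()).lower()
    return [phrase for phrase in phrases if phrase.lower() not in text]


if __name__ == "__main__":
    spa_shell = '<html><body><div id="root"></div><script>render("Pricing guide")</script></body></html>'
    server_rendered = "<html><body><h1>Pricing guide</h1></body></html>"
    print(missing_phrases(spa_shell, ["Pricing guide"]))
    print(missing_phrases(server_rendered, ["Pricing guide"]))
```

A page whose important phrases only exist inside script bodies is a candidate for server-side rendering or a static fallback.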

Quick checklist

- Robots.txt rules updated for AI bots.

- SPA/JS pages tested for crawl/read access.

- Governance doc with owners and review cadence.

Step 3: Make High-Value Content Accessible (Especially PDFs & Docs)

LLMs “adore PDFs” and content that gives direct answers. In SaaS especially, documentation sections are often the most cited assets, not the blog. You can still keep your human lead-gen funnel while making the knowledge itself available to models.

When conversations in LLMs hinge on specific how-to prompts, models favor precise, answer-first resources. If your knowledge sits behind hard gates (or is just hard to reach), you miss citations.

Action plan

- Ungate PDF libraries for LLM bots at minimum (white papers, research, collateral). You’re not required to ungate these assets for humans. This is about letting crawlers learn.

- Ensure your documentation/knowledge base is crawlable and well structured; add obvious internal links and a file sitemap so bots can find everything.

- Prioritize assets that directly answer common buyer questions in your category.
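A file sitemap is just a standard XML sitemap whose entries point at PDFs and other documents. As a minimal sketch, assuming you already have the public URLs from your inventory, the helper below (a hypothetical `build_file_sitemap`) emits one with the standard library.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_file_sitemap(file_urls: list[str]) -> str:
    """Return sitemap XML listing each unique file URL once, sorted."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in sorted(set(file_urls)):
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url  # special characters are escaped on output
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    print(build_file_sitemap([
        "https://www.example.com/whitepapers/ai-visibility.pdf",
        "https://www.example.com/docs/integration-guide.pdf",
    ]))
```

Save the result at a path such as `/sitemap-files.xml` and reference it from robots.txt with a `Sitemap:` line so crawlers can find it.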

Three-lane access policy (human vs. bot)

| Lane | Human access | LLM access | Examples | Notes |
| --- | --- | --- | --- | --- |
| A: Open | Open | Open | Docs, how-to, integration guides | Fully indexable text content |
| B: Hybrid | Gated (form) | Open | Whitepapers, research | HTML summary + text-based PDF crawlable |
| C: Closed | Customer-only | Closed | Customer-only docs | Publish capability abstracts instead |

Pitfalls

- Ungating nothing “just to be safe” leaves LLMs to learn from weaker third-party sources.

- Publishing scans or image-only PDFs that are unreadable.

PDF Access Policy (decision template)

| Asset type | Human gate? | LLM access? | Rationale | Implementation note |
| --- | --- | --- | --- | --- |
| White papers | Yes | Yes | Teach models core POV & proof | Robots.txt allow + links |
| Research briefs | Optional | Yes | Direct answers & stats | HTML landing + PDF link |
| Product guides | No | Yes | High-frequency how-to citations | In docs + sitemap entry |
| Executive one-pagers | Yes | Yes | Clear category framing | Ensure text-based PDF |

Quick checklist

- Inventory PDFs/docs; tag by topic and intent.

- Decide human vs. LLM gating policy per asset type.

- Add internal links/file sitemap so bots can actually find files.

Step 4: Fix the Money Pages & Your Structured Data

LLMs follow the conversation’s intent and need a fast, machine-readable snapshot to decide whether to go deeper. Schema is the way to speak to crawlers in their own language. Meanwhile, “money pages” (the services/solutions pages in your main nav) are under-optimized roughly 70% of the time in long-standing B2B brands; they’re effectively abandoned.

If your high-value pages don’t instantly convey who you serve, what you do, and why you’re relevant to a query, crawlers may not dive in. You lose both citations and human clarity.

How to do it

- Add or upgrade schema/structured data: Organization, Service, Article, and Author, where applicable. This provides a quick snapshot that encourages models to read more.

- Tighten meta and on-page: make the value prop explicit, align headings to buyer intent, and surface short answers/definitions near the top.

- Link money pages to documentation and research so models (and humans) can go deeper if it matches the conversation.
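Structured data for a money page is usually embedded as JSON-LD. The sketch below builds Organization and Service objects using schema.org property names (`name`, `url`, `description`, `sameAs`, `serviceType`, `provider`). The company details are placeholders and the helper functions are hypothetical, so adapt the fields to what your pages actually claim.

```python
import json


def organization_jsonld(name: str, url: str, description: str, same_as: list[str]) -> dict:
    """schema.org Organization snapshot; same_as lists official profile URLs."""
    return {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        "description": description,
        "sameAs": list(same_as),
    }


def service_jsonld(name: str, description: str, service_type: str, provider: dict) -> dict:
    """schema.org Service that points back to the providing organization."""
    return {
        "@context": "https://schema.org",
        "@type": "Service",
        "name": name,
        "description": description,
        "serviceType": service_type,
        "provider": {
            "@type": "Organization",
            "name": provider["name"],
            "url": provider["url"],
        },
    }


def as_script_tag(data: dict) -> str:
    """Wrap JSON-LD in the script tag that goes in the page's HTML."""
    payload = json.dumps(data, indent=2).replace("</", "<\\/")  # keep "</" out of the script body
    return '<script type="application/ld+json">\n' + payload + "\n</script>"


if __name__ == "__main__":
    org = organization_jsonld(
        "Example Co",
        "https://www.example.com",
        "B2B analytics consultancy for mid-market SaaS teams.",
        ["https://www.linkedin.com/company/example-co"],
    )
    print(as_script_tag(service_jsonld(
        "Analytics Audit", "A two-week review of tracking and reporting.", "Consulting", org,
    )))
```

Keep the `description` values aligned with the on-page value proposition so the machine-readable snapshot and the visible copy say the same thing.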

Step 5: Create for Citations Now & Train the Next Model (Consistency Everywhere)

There are two games: earning citations now and shaping what the next generation of models will learn. Models pay special attention to top-tier domains and to consistent positioning across the public web.

You can’t retrain models that are already shipped because “they know what they know.” But you can influence the next refresh by being clear and consistent wherever models read from. And you can earn citations today by shipping answer-first formats that LLMs favor: lists, glossaries, summaries, definitions.

Action plan

- Build an answer library: short, precise definitions, summaries, and lists on your core topics.

- Use your internal GEO (generative engine optimization) guidelines to engineer content toward LLM expectations.

- Refresh public bios and company descriptors across high-visibility profiles and publications; align language to the positioning you want models to repeat.

- Plan a modest, steady cadence of placements on authoritative domains (interviews, contributed answers, recognitions) so the public record matches your desired positioning.
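Consistency across profiles can be roughly screened before a human reviews the wording. The sketch below scores each public descriptor against a canonical positioning statement with `difflib.SequenceMatcher` from the standard library. The statement, the profile names, and the `consistency_scores` helper are all hypothetical, and character-level similarity is a crude proxy that only flags candidates for review.

```python
from difflib import SequenceMatcher


def _normalize(text: str) -> str:
    """Lowercase and collapse whitespace so formatting differences do not count."""
    return " ".join(text.lower().split())


def consistency_scores(canonical: str, descriptors: dict[str, str]) -> list[tuple[str, float]]:
    """Return (location, similarity) pairs, least similar first."""
    target = _normalize(canonical)
    scores = [
        (where, round(SequenceMatcher(None, target, _normalize(text)).ratio(), 2))
        for where, text in descriptors.items()
    ]
    return sorted(scores, key=lambda item: item[1])


if __name__ == "__main__":
    canonical = "Example Co helps mid-market SaaS teams fix analytics tracking."
    found = {
        "website": "Example Co helps mid-market SaaS teams fix analytics tracking.",
        "directory listing": "A social media scheduling tool for small agencies.",
    }
    for where, score in consistency_scores(canonical, found):
        print(f"{score:.2f}  {where}")
```

Entries at the top of the list are the first places to refresh so the public record repeats the positioning you want.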

Pitfalls

- Chasing “AI SEO rankings in ChatGPT.” LLMs do not rank sites. Focus on citations and demand capture.

- Inconsistent descriptions of who you are and what you do across the web.

The marching orders are simple: “Be super consistent in your positioning everywhere.” And remember, “prompts are not keywords. Citations are not backlinks.”

Metrics & Measurement

You can and should measure your brand’s AI visibility. Measuring the effect is no longer a complete black box.

How to do it

- Use Google Analytics as your first-party source of truth to see which landing pages are visited from AI-driven sources and when spikes correlate with content launches or placements.

- Build a lightweight dashboard that tracks:

  - Sessions and landings for your answer-first assets (docs, definitions, research).

  - Referral patterns that map to AI-related sources where visible.

  - Simple overlays for audit dates and major content releases.
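The referral tracking above can be prototyped on an exported report before building a dashboard. This is a sketch under stated assumptions: the row format (`referrer`, `landing_page`, `sessions`) is a hypothetical export shape rather than an analytics API, and the hostname list is an assumed starting point to extend from what your own reports show.

```python
from collections import Counter
from typing import Optional
from urllib.parse import urlparse

# Assumed referrer hostnames for AI assistants; extend from your own data.
AI_REFERRER_HOSTS = {
    "chatgpt.com": "ChatGPT",
    "chat.openai.com": "ChatGPT",
    "perplexity.ai": "Perplexity",
    "claude.ai": "Claude",
}


def ai_source(referrer: str) -> Optional[str]:
    """Map a full referrer URL to an AI source label, or None if not recognized."""
    host = (urlparse(referrer).hostname or "").lower()
    if host.startswith("www."):
        host = host[4:]
    return AI_REFERRER_HOSTS.get(host)


def sessions_by_landing_page(rows: list[dict]) -> dict:
    """Sum sessions per (AI source, landing page), ignoring other referrers."""
    totals: Counter = Counter()
    for row in rows:
        source = ai_source(row["referrer"])
        if source:
            totals[(source, row["landing_page"])] += row["sessions"]
    return dict(totals)


if __name__ == "__main__":
    rows = [
        {"referrer": "https://chatgpt.com/", "landing_page": "/docs/setup", "sessions": 4},
        {"referrer": "https://www.perplexity.ai/search", "landing_page": "/docs/setup", "sessions": 2},
        {"referrer": "https://www.google.com/", "landing_page": "/", "sessions": 9},
    ]
    for (source, page), sessions in sessions_by_landing_page(rows).items():
        print(source, page, sessions)
```

Referrers without a scheme (for example a bare `chatgpt.com`) are not recognized by this sketch, so normalize the export first if your tool strips the protocol.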

This is your first-party data, and it’s the right starting point to connect content to real outcomes, extending to leads and pipeline when you have the volume.

Quick checklist

- Create views that surface traffic to “answer” assets.

- Annotate with audit runs and content pushes.

- Tie to lead quality where possible.

Common Mistakes and Quick Fixes

| Mistake | Why it hurts | Quick fix |
| --- | --- | --- |
| Blanket Disallow in robots.txt | LLMs can’t learn from you | Allow docs/resources; keep sensitive paths closed |
| Gating all PDFs | Models quote third parties instead | Publish HTML summaries + text-based PDFs |
| Thin money pages | Crawlers don’t dive deeper | Add Service schema, FAQs, deep links |
| Measuring nothing | You can’t prove impact | GA4 Answer-Asset grouping + annotations |

Tooling & Workflow Tips

- Use an audit workflow that distinguishes memory from live and exposes source domains so you can plan fixes.

- Keep robots.txt and site access under shared marketing-dev governance.

- Maintain a simple internal GEO (generative engine optimization) guidelines document for answer-first content.

- Use GA4 for first-party tracking; add lead attribution when volume allows.