Executive Reality Check: What Actually Changed

The biggest shift isn’t algorithmic; it’s behavioral. AI compresses the “explain this to me” layer, so fewer people click ten posts to learn the basics. But once the problem feels risky or expensive, buyers still click straight to vendors who look safest.

Two outcomes now matter most:

- Citations: your facts, charts, or definitions get quoted in AI answers.

- Recommendations: your brand appears on shortlists when someone asks “best X for Y.”

Citations build trust. Recommendations build pipeline. Your portfolio needs to earn both. Google itself keeps saying the best practices for helpful content still apply, and that mass-producing thin, AI-generated pages is still spam. Treat that as your guardrail, not your strategy.

Think of every hub as a sales enablement artifact first, an SEO page second. If an AE can’t use it tomorrow, it won’t persuade AI either.

Google’s own comms say AI Overviews/AI Mode show a wider range of sources and link outward; your job is to be the obvious, quotable, safe source to click next.

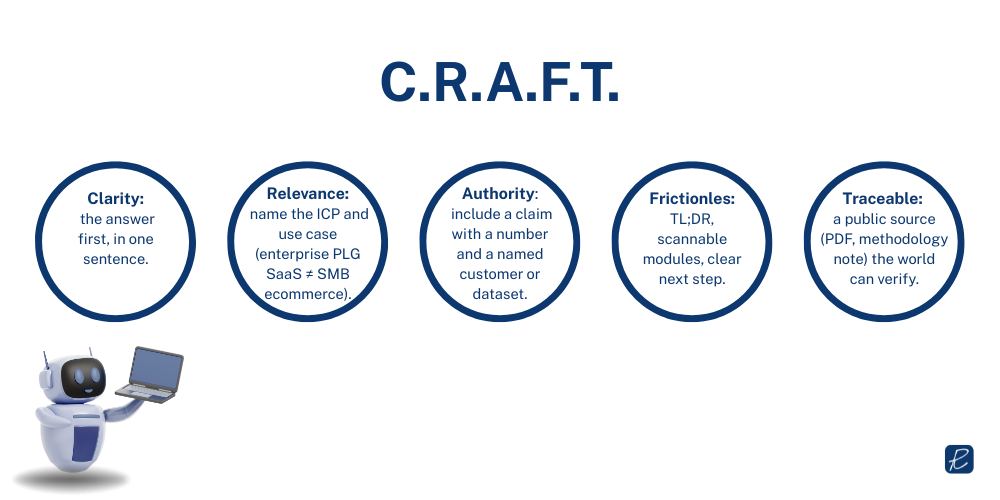

I use a mental checklist I call C.R.A.F.T. for every high-stakes page:

- Clarity: the answer first, in one sentence.

- Relevance: name the ICP and use case (enterprise PLG SaaS ≠ SMB ecommerce).

- Authority: include a claim with a number and a named customer or dataset.

- Frictionless: TL;DR, scannable modules, clear next step.

- Traceable: a public source (PDF, methodology note) the world can verify.

If a page misses any letter, it’s unlikely to be lifted, linked, or recommended.

Replace Fluff With Proof-Led Portfolio Design

Here’s the contrarian bit: most B2B sites need half as many posts and twice as much proof. A resilient 12-24-month mix looks like this:

- Commercial-intent pages (Service × Industry / Use-Case / Tech): These win clicks after an AI summary. Build each like a mini homepage: outcome-first headline, numbers, short “how we do it,” tiny FAQ written in buyer language, sensible CTA. If you can’t put a logo + number on the page, it’s not ready.

- First-party research (HTML + PDF, partially ungated): Benchmarks, before/after studies, field notes. Add a 100-word abstract and one liftable claim. Host on your domain. Make at least an abstract or summary public so it can be cited.

- Implementation content (docs, patterns, “unhappy paths”): The unsexy “how it really works” material that AI frequently cites and buyers forward internally.

- Selective thought leadership: Opinionated pieces that frame your category or call a contrarian shot, only when you have something quotable to say.

Google’s own guidance reinforces this: helpful, people-first content wins; scaled, low-value pages do not. If an asset doesn’t reduce deal risk, it’s probably not worth shipping this year.

Category Positioning & ICP Labeling

This is where recommendations are won. Models and humans both over-index on adjectives. “Enterprise,” “regulated,” “MVP,” “fintech,” “healthcare” – your job is to use the ones your ICP already uses about itself.

A few practical rules:

- Write the headline the way a buyer would: **“Enterprise demand gen for PLG SaaS,”** not “Results-driven marketing solutions.”

- Make the label consistent across surfaces (site, G2/Clutch, LinkedIn, partner directories, press bios, review prompts). A mismatch is a trust leak.

- Coach reviews to include qualifiers naturally (“mid-market fintech,” “Series B,” “HIPAA”).

Adjective discipline beats keyword lists. Pick the 3-4 labels you can defend and use them everywhere for a year.

Solution Hubs & Problem Libraries (Pages Built for Decisions)

A hub should read like a decision page, not a blog post. Lead with the answer:

“We help enterprise PLG SaaS cut CAC payback with pipeline-quality SEO in 90 days.”

Then prove it. Add a “How we do it” snapshot (three steps), a short case with numbers, a tools/stack note, and a five-question FAQ that uses your ICP’s actual words. Under each hub, create a problem library: small pages for recurring pains (“migrating to subfolders without losing traffic,” “launching a new region without cannibalization”), each with a 2-line fix and a link to the deeper doc or case.

First-Party Research Library (HTML + PDF, On-Domain)

Models quote facts. Make yours easy to find and safe to reuse. Four research archetypes work well:

- Benchmark (e.g., “Average CAC payback by motion in 2025”)

- Before/after (e.g., “Velocity lift after restructuring to /blog/”)

- Field guide (e.g., “LLM-friendly content packaging: a checklist with examples”)

- Failure analysis (e.g., “Why 38% of multi-region launches lose share in month 1”)

Each needs: a 100-word abstract, a method section someone could reproduce, and a public artifact (PDF on your domain). Gate the deep dive if you must, but leave a cite-able version ungated. That’s the “traceable” in C.R.A.F.T.

For each study, the packaging checklist is short:

- Publish an HTML explainer with a 100-word abstract + a polished on-domain PDF.

- Include a method section someone could reproduce.

- Keep at least summaries public for citability; gate deep dives if economics demand.

Unique view: don’t publish a number you wouldn’t defend on a livestream. If it’s not reproducible, it’s forgettable (or a liability).

Documentation, Help Center & Product Content

Docs might be your highest-ROI content… if they’re written for humans.

What to include that most teams skip:

- Constraints & trade-offs (what your approach doesn’t do and why)

- Integration flight-checks (a short pre-launch list your buyer can hand to a dev)

- Unhappy paths (what breaks, symptoms, fix in one paragraph)

- Version notes that matter (what changed that affects outcomes, not UI pixels)

Perfection reads like marketing. Clear limits read like expertise. AI models and executives both trust content that admits boundaries.

Distribution That Compounds Authority

Authority happens in ecosystems, not silos. The fastest compounding loops:

- Analyst and curator relationships: Brief the 3-5 people your ICP already reads. Bring data and named customers. Ask for what you want: a quote request next time they cover your topic.

- Credible top lists & directories: Yes, mentions (even without links) still matter. Target the lists that your actual buyers bring up on calls. Offer a clean one-pager with outcomes and references; many editors will add you when you make their life easy.

- Reviews with specificity: Fresh + specific beats numerous + vague. Ask for the industry, team size, problem, and outcome in plain English.

- Partner enablement: Co-create a small asset with a platform your buyers already use. Their brand is borrowed trust; your proof makes it stick.

One great relationship beats ten cold PR pitches. Treat analysts/curators like product stakeholders, not distribution channels.

How Google’s AI Impacts B2B SEO & Content Strategies

Stop writing for a hypothetical “position.” Start engineering evidence and fit. In an AI-first SERP, “topical authority” is less about volume and more about evidentiary density (named customers, defensible numbers, reproducible methods) and signal distribution in places humans and models already trust.

SERP Real Estate & CTR Dynamics Under AI Overviews/AI Mode

When an AI summary renders, fewer users click traditional results. Pew found that users clicked a traditional result on ~8% of visits with an AI summary vs. ~15% when no summary appeared: a 7-point gap, or a relative drop of nearly half (about 47%).
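The arithmetic behind that comparison is worth making explicit, because absolute and relative changes are easy to confuse. The snippet below is a minimal sketch; the function name `relative_drop` is my own, and the inputs are the approximate Pew figures quoted above.

```python
def relative_drop(baseline_rate: float, observed_rate: float) -> float:
    """Return the decline from baseline to observed as a fraction of baseline."""
    if baseline_rate <= 0:
        raise ValueError("baseline_rate must be positive")
    return (baseline_rate - observed_rate) / baseline_rate


# Approximate figures quoted above: ~15% without a summary, ~8% with one.
without_summary = 0.15
with_summary = 0.08

absolute_gap = without_summary - with_summary
drop = relative_drop(without_summary, with_summary)

print(f"Absolute gap: {absolute_gap * 100:.0f} percentage points")
print(f"Relative drop: {drop:.1%}")  # prints "Relative drop: 46.7%"
```

The same function works for your own pages: plug in click-through before and after a summary started appearing for a query set, and report both the point gap and the relative drop.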

Industry analyses echo the trend (often 30-35% fewer clicks), especially on non-branded informational queries. Google counters that people are more satisfied and that AI experiences show a wider range of sources and links. All can be true at once: fewer, but better-qualified clicks, if your page is the next obvious step.

What that means in practice:

- Answer-first packaging: TL;DR, bold claim, and scannable proof, so your snippet feels indispensable after a summary.

- Named-entity clarity: Company, product, industry, compliance, etc., to match shortlist prompts.

- Linkable artifacts: Public abstracts and on-domain PDFs that AI can comfortably cite.

- Avoid scaled fluff: Google’s spam policy on scaled content still applies, AI or not.

Don’t fight AI summaries; design for the click that still happens, which is straight to a decision page with proof.

Operating Model & Governance

Strategy dies without process. A lightweight way to keep this moving:

- SME time is sacred: one 60-minute block per week per pillar. Don’t let it slip.

- Two-step reviews: content edit today, legal/brand sign-off tomorrow. The SLA is the strategy.

- Red team review: once a month, someone tries to falsify your claims. If they can, fix the page or pull it.

- Content deprecation: if a claim is out of date and unfixable, unpublish or archive the page with a clear note. Stale proof is worse than no proof.

Proof velocity is a leading indicator. Count “new, named, cite-able facts shipped this month.” If that number’s rising, your program’s healthy.
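If you keep a simple log of shipped claims, the count is easy to automate. What follows is a rough sketch, not a prescribed tool: the `Fact` record, its fields, and the sample entries are all hypothetical, and "cite-able" is approximated here as "has a public URL."

```python
from collections import Counter
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Fact:
    claim: str
    shipped: date
    named_source: bool  # True if the claim names a customer or dataset
    public_url: str     # empty string when nothing public backs the claim


def proof_velocity(facts):
    """Count facts per month that are both named and publicly citable."""
    counts = Counter()
    for fact in facts:
        if fact.named_source and fact.public_url:
            counts[fact.shipped.strftime("%Y-%m")] += 1
    return dict(sorted(counts.items()))


# Hypothetical log entries, invented purely for illustration.
facts = [
    Fact("Example claim A", date(2025, 1, 14), True, "https://example.com/a.pdf"),
    Fact("Example claim B", date(2025, 1, 28), True, ""),  # not public: skipped
    Fact("Example claim C", date(2025, 2, 3), True, "https://example.com/c.pdf"),
    Fact("Example claim D", date(2025, 2, 17), True, "https://example.com/d.pdf"),
    Fact("Example claim E", date(2025, 2, 20), False, "https://example.com/e.pdf"),  # unnamed: skipped
]

print(proof_velocity(facts))
```

Claims that fail either test are dropped on purpose: an unnamed or unpublished number does not count toward the metric as the paragraph above defines it.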

Measurement When Rankings Blur

You won’t get a single magic metric. You will get a tight bundle that points one way.

Build a dashboard with four dials:

- Inclusion & shortlist signals: mentions in credible lists/roundups, analyst quotes, review velocity. Expect more “zero-click” behavior overall, so external signals matter.

- Page-level behavior that screams “commercial intent”: growth in Top Viewed (not just Landing) for pricing, demo, and service pages; higher click-through from research/docs to sales actions. (Think “fewer clicks, stronger clicks.”)

- Dark-traffic triangulation: correlate direct/“(not set)” lifts with server-log pings from bots and a branded-query uptick. Multiple independent analyses in 2025 documented lower CTR with AI summaries; you’re watching for compensating brand pull and downstream behavior.

- Revenue instrumentation: opportunities influenced by commercial pages or research; win-rate and cycle-time movement in ICP deals.
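The dark-traffic dial is the one that most benefits from a little arithmetic. One possible way to triangulate, sketched below, is a plain Pearson correlation between weekly series. The weekly numbers are invented for illustration, the variable names are my own, and a handful of data points only gives a directional hint, never proof of cause.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length series."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("series must have equal length of at least 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant series")
    return cov / sqrt(var_x * var_y)


# Hypothetical weekly counts, invented for illustration only.
direct_sessions = [410, 395, 450, 480, 520, 515, 560, 590]
ai_bot_hits = [120, 110, 160, 170, 210, 200, 240, 260]    # from server logs
branded_queries = [300, 310, 330, 345, 370, 365, 400, 420]

print(f"direct vs. bot hits:        {pearson(direct_sessions, ai_bot_hits):.2f}")
print(f"direct vs. branded queries: {pearson(direct_sessions, branded_queries):.2f}")
```

If direct traffic moves with both bot activity and branded search over several months, that is consistent with AI-driven discovery feeding brand pull. Treat it as one input alongside the other three dials, not a verdict.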

Also track an AE usage rate: how often reps paste your pages or research into emails. Humans are the best ranking algorithm you’ll ever have.